The newest “overnight sensation” in tech talk, Artificial Intelligence (AI) is the hot topic of the day at seemingly every tech conference and discussion among CIOs. Looking back, technologists have been working to create a machine capable of learning like a human, deciding like a human, and acting based on the decisions from the learning since the mid-1950s.

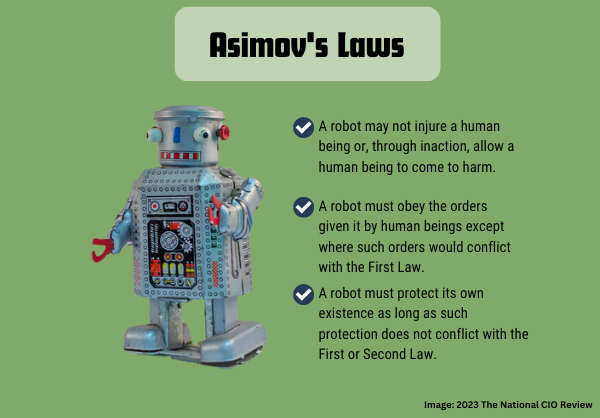

In fact, Hollywood has been scaring us with AI programming since that same time. Sci-Fi authors were only slightly ahead of movies and television, and they were making us think even before that – Isaac Asimov’s short story introducing his Three Laws of Robotics came out in 1942.

In 2023, we are “suddenly” resurrecting the Three Laws and trying to figure out how to implement a governance model or framework that will prevent the machines from doing harm to humans.

Why is this happening?

Over at Google, one of the senior technologists – Geoffrey Hinton – just resigned so he could explain to us the dangers he sees in an ungoverned continuance of building and training AI. Also at Google, we have seen reports [Google’s Artificial Intelligence Built an AI That Outperforms Any Made by Humans] of a “Child AI” entirely built by a “Mother AI” that learns faster than the mother and is trained by the mother – not by human techies. I can see a “Grandchild AI” coming soon that doesn’t interact with humans and has machine language code inaccessible to human programmers – making interventions difficult if not impossible.

In the massively multiplayer online game (MMOG) world, we also have reports of AI players “coming together and cooperating” to decimate the human players in ways that show no compassion or empathy for them whatsoever.

Who is actually shocked by this?

It turns out that lots of people are indeed shocked! Hollywood movies and TV shows have consistently shown the “Pinocchio Version of the AI story” where the AI mimics human emotions so much and so well that it becomes “human” in its responses to the world it sees and analyzes – see Spielberg’s movie A.I. Artificial Intelligence. The character Data from Star Trek is another example of this, but there are many more. Even Arnold Schwarzenegger’s Terminator turned out to be a good guy protecting John and Sarah Connor from the evil, non-feeling machine overlords.

Futurist Peter Diamandis recently posted an email wherein he stated that the learning and mimicking of human responses would inevitably lead to the breakthrough of machines developing real emotions just like those of a human. When I read this last week, I immediately thought, “Could this be right?” Hollywood says, “Yes.” The technologist in me said, “Probably not.” The intense skeptic in me rose up and said, “There are many humans that think, analyze, and act without normal human emotional guidance – we call them sociopaths and psychopaths.”

These humans process information very well – some of them have remarkable IQ scores – they are just not able to access the empathy or sympathy that guides most people’s behavior. They do mimic these behaviors as a defensive mechanism, but it is not real – it is artifice or “artificial emotions” as the behavioralists explain. They put on a false face to avoid being found out and excommunicated from the rest of us normal folks. What if these unemotional humans were in positions of power? Names like Mao, Hitler, and Stalin come to mind in answering that question.

Many of the signers of the “Slow down, stop the training of AI” letter have stated that THIS is the concern: A machine – one that processes well, analyzes huge data sets, and has agency (the ability to act) – might ultimately learn that humans are more squirrelly than squirrels, mostly irrational, and therefore a problem that should be eliminated. [AI poses risk of extinction, tech leaders warn in open letter. Here’s why alarm is spreading] Outcome: “Skynet is online.”

Meanwhile, the rest of the normal folks are watching the announcements of job cuts from big tech companies – where the press releases [300 million jobs will be replaced, diminished by Artificial Intelligence, report warns] specifically state that the jobs are being replaced by AI technology.

A small percentage of the normal, everyday, working folks may also have watched the hearings in Congress on the subject of governing AI. They heard from the experts called to testify [Congress wants to regulate AI, but it has a lot of catching up to do] that there are real concerns that need to be addressed quickly, BUT… we can’t stop what we’re doing due to market pressures and, with no recognized enforcement authority anywhere in sight, the “bad guys” will continue to pursue advances in the capabilities of their creations [AI drone ‘kills’ human operator during ‘simulation’ – which US Air Force says didn’t take place] no matter what.

“Market pressures” means Microsoft sees an opportunity to take away Google’s dominance in search by adding OpenAI capabilities to everything it offers. Google sees this and feels compelled to defend its turf, and we get to watch the AI arms race. [The AI Arms Race Is Changing Everything] This is interesting and concerning, as we have already seen the nation-state competition over “who has the fastest supercomputer” go back and forth for years between the USA and China.

The congressional response to the panel of experts can be summarized this way: “Can one of you techie people tell me how to turn off notifications on my iPhone?” Government regulation will probably be late to the party, as the regulators are obviously unprepared for the debate. Self-regulation by the builders of AI is the faster, better approach – similar to what the nuclear power industry did with INPO during the Carter administration – with voluntary participation and inspections/evaluations against a defined framework such as the Three Laws +. There is enough evidence that we have legitimate concerns for the builders to take such action, but the questions of who, how, and what enforcement mechanisms to use are huge problems to solve.

My Prediction

The letter signers will not see the machine builders pause, slow down, or stop. The nation-states are engaged and running fast as well. Investor money is attracted to anything “AI,” and governments will do nothing to slow any of this.

The race is definitely on.

There is no telling the end of the story at this point – but the pattern is clear: good people will use the tools for good purposes; bad people will use the tools for bad purposes; and both courses of action will produce unintended consequences for all the rest of us.