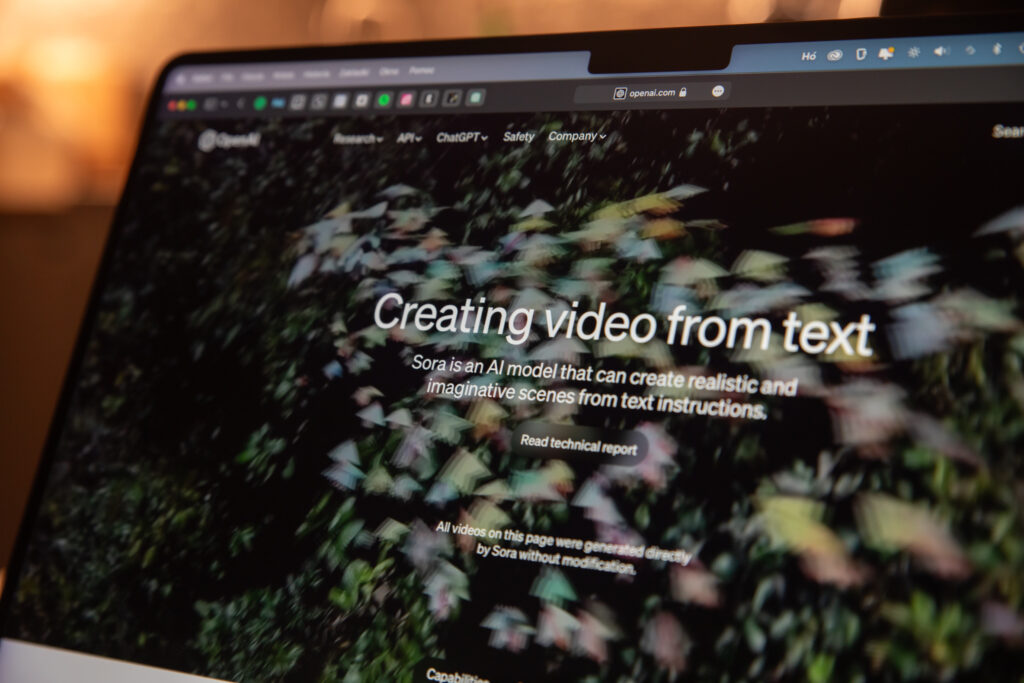

OpenAI has introduced Sora, a text-to-video generation model. Sora turns written prompts into photorealistic videos up to a minute long and can construct complex scenes with multiple characters, intricate motion, and detailed backgrounds.

The model marks a significant step beyond earlier text-to-image generators and offers a glimpse of a future in which video creation is more accessible and less constrained creatively.

Why it matters: Sora’s ability to generate these videos raises ethical and societal questions, particularly about misinformation and the blurring of the line between reality and AI-generated content. OpenAI’s cautious approach to the release, which involves red teamers and select creators providing feedback, highlights the importance of balancing innovation with responsible development and deployment.

- With other companies such as Google and Runway also developing text-to-video technologies, Sora’s entry heats up the competition and sets the stage for further advances in AI-generated content. Improving video quality and addressing ethical concerns will be crucial for future developments.

- Businesses could use Sora to produce high-quality promotional videos quickly, strengthening their marketing with visual content that communicates product features and benefits.

- Despite its advanced capabilities, Sora sometimes struggles to simulate complex physical interactions accurately, which leads to occasional unnatural elements in its videos. This underscores the ongoing need to improve AI’s understanding of the physical world.

OpenAI introduces Sora, its text-to-video AI model – The Verge